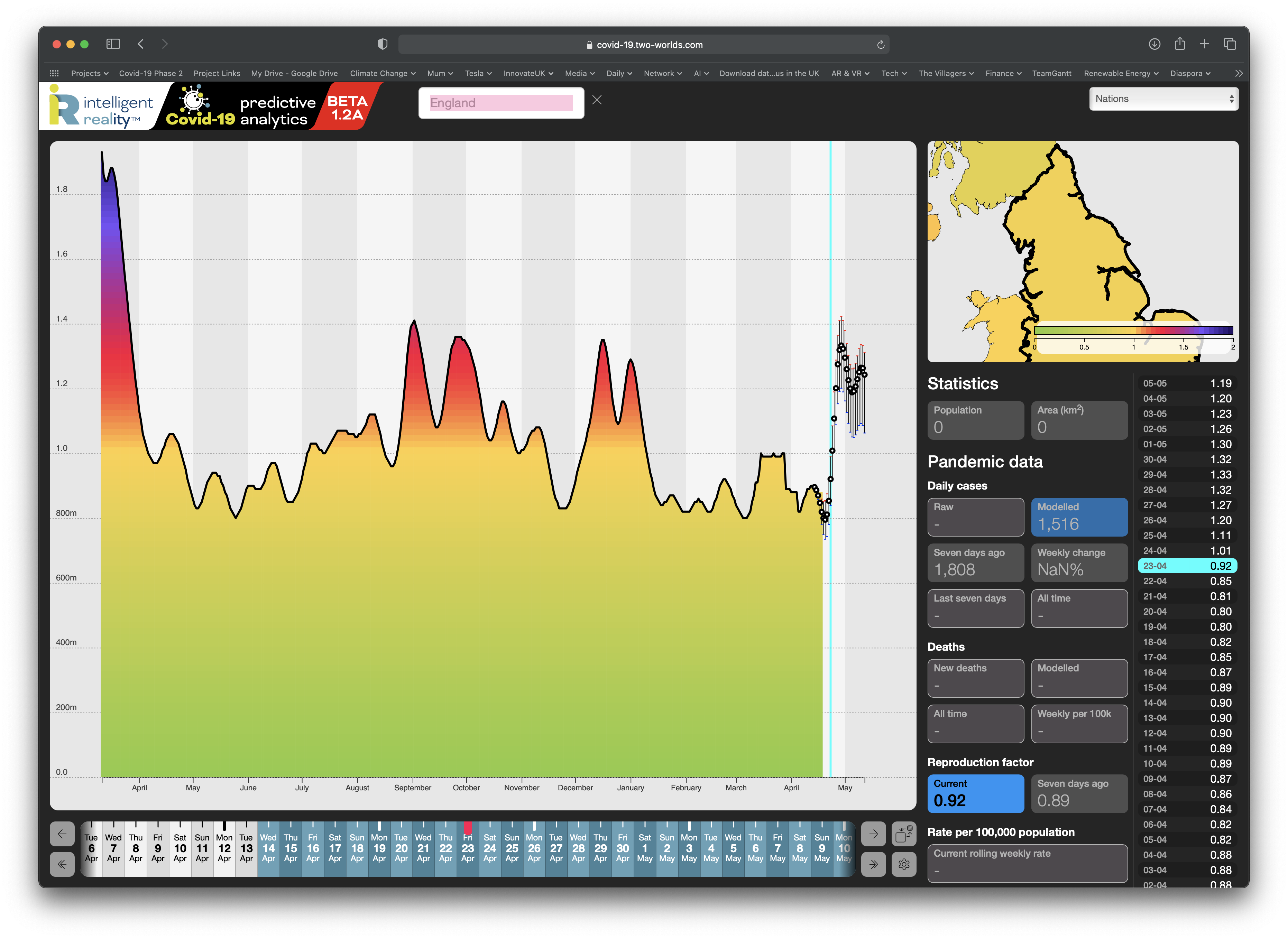

SAGE announced today that England’s R number has risen to between 0.8 and 1. SAGE updates its estimates once a week, based on modelling of data that lags even further behind.

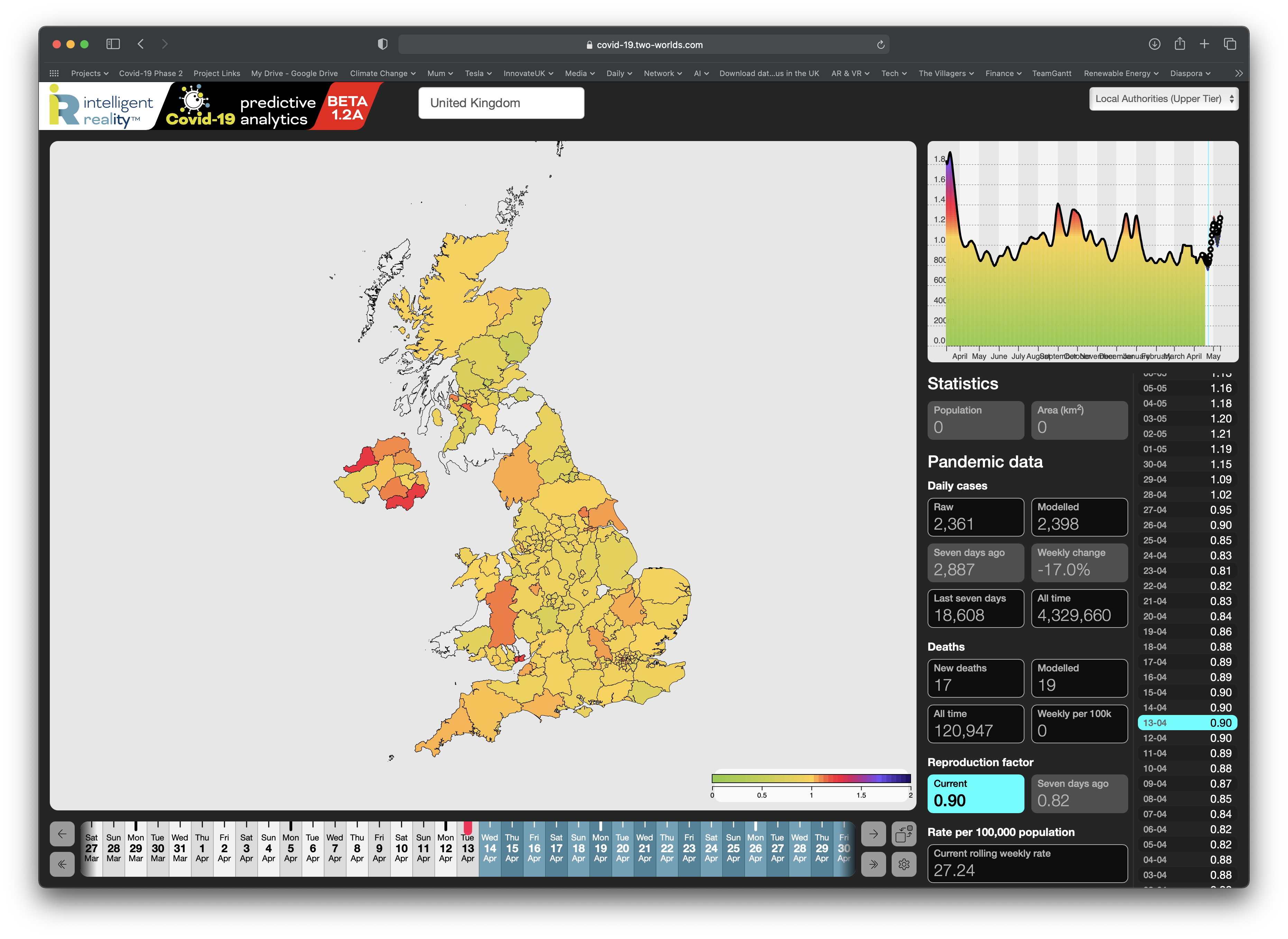

We take a different approach: we use emergent and inferential analysis to generate R number calculations and 28-day forecasts, on a daily basis, for every local authority in the UK.

We can say that England, as of today, is at an R number of around 0.92, up from a low of 0.80 on 19 April. Our forecasting suggests that R will go over 1.0 from tomorrow, reaching roughly 1.3 by the end of the month. England is leading the way, followed by Wales and Northern Ireland, with Scotland doing rather better, for the moment at least.

The important thing, though, is not the country-level data but what’s happening locally. If I were making policy for the West Country, Yorkshire, Cumbria or Northern Ireland, I’d be considering taking action now. Bear in mind, though, that absolute case numbers are still low, albeit rising, so it doesn’t take many new cases to drive the R number up. R is not a hugely useful metric locally at low levels of the pandemic, which is why it’s only one of a range of attributes we use to drive our forecasting.
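To illustrate why R is so sensitive to a handful of cases when counts are low, here is a minimal sketch of a crude R estimate. It is not our forecasting method. It assumes exponential growth between two consecutive weekly case totals and a fixed generation interval (a hypothetical default of 5 days), and uses the textbook approximation R ≈ exp(r × g), where r is the daily growth rate and g the generation interval.

```python
import math


def naive_r(cases_this_week: int, cases_last_week: int, generation_days: float = 5.0) -> float:
    """Crude R estimate from two consecutive weekly case totals.

    Assumptions (illustrative only): growth is exponential across the
    two weeks, and the generation interval is fixed at `generation_days`
    (a hypothetical 5-day default), so R = exp(r * g).
    """
    # Daily exponential growth rate implied by the week-on-week change.
    daily_growth = math.log(cases_this_week / cases_last_week) / 7.0
    # Convert the growth rate into a reproduction number.
    return math.exp(daily_growth * generation_days)


# A small local authority: 10 cases last week, 12 this week.
print(round(naive_r(12, 10), 2))
# A large region with the same absolute rise of 2 cases.
print(round(naive_r(1002, 1000), 2))
```

The same absolute rise of two cases pushes the small area's estimate to about 1.14, while in the large region it barely moves R away from 1.0.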

But this does demonstrate how far behind the curve the government is, largely because it relies on cumbersome, lagging models both for core analysis and for discovering the clusters and correlations that may flag changing patterns and vulnerabilities.

The irony is that our inferential approach and the statistical, hypothesis-based approach apparently in use by others are complementary, not contradictory. Ours surfaces patterns and trends very rapidly, enabling us to create timely forecasts and to generate clusters and correlations, but it does not attempt to address causality; that’s where hypothesis-driven models come into their own. What the latter aren’t good for is high-speed discovery: they surface the things you need to know, but didn’t even know to ask about, only long after the event.

When you’re dealing with a fast-moving pandemic, you can’t be making decisions based on analysis of what was happening a fortnight ago.

So how often do we have to say this? How long does it take to get the message across that the world of analytics has moved on from the rigid, deterministic models of yesteryear, and that now is the time we need to be building state-of-the-art capability, both for the present and for the future?

Every country needs a permanent, full-time data collection and analytics infrastructure that provides both insight and forecasting at an actionable level and in a genuinely timely manner, not just for the pandemic but to support a wide range of public health and other societal issues, not least climate change. Most places simply don’t have such an infrastructure (and here the UK is just one forewarned but desperately complacent example amongst many). This pandemic is far from over, and the nature of the modern world tells us that it won’t be the last.

But until governments choose to invest both in robust data collection infrastructure and in analytic approaches that belong to the current century, policy decisions are doomed to remain exercises in slamming the stable door when the horse is already over the next hill.